Introduction to Containers

Containers – in simple words

In the cloud computing world, especially in AWS, there are three main categories of compute: virtual machines (VMs), containers, and serverless. There is no one-size-fits-all compute service; the right choice depends on your needs. To build an appropriate cloud architecture, it is important to understand what each option offers. Today, we will explore containers and how to run them.

Containers

The idea dates back a long way, to certain UNIX kernels that could isolate processes from one another.

As the open-source software community evolved, so did container technology. Today, containers can host a wide variety of workloads, including web applications, lift-and-shift migrations, and distributed applications. They also help streamline development, test, and production environments.

Containers also solve a common problem of traditional compute: running applications reliably when they are moved from one computing environment to another.

A container is a standardised unit that packages your code and its dependencies. This package is designed to run reliably on any platform, because the container creates its own independent environment.

With containers, workloads can be moved from one place to another, such as from development to production or from on-premises to the cloud.

Docker

The terms Docker and containers are often used interchangeably, although Docker is one specific container technology. Docker is the most popular container runtime. It simplifies management of the entire operating system stack needed for container isolation, including networking and storage. Docker is used to create, package, deploy, and run containers.

What is the difference between containers and virtual machines?

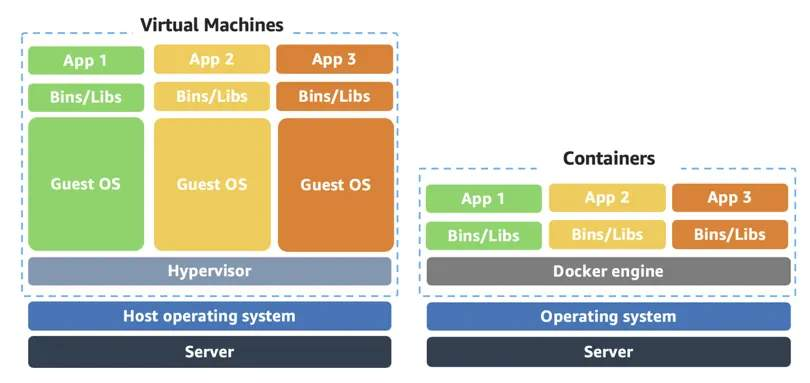

Containers share the operating system kernel of the host they run on, whereas each virtual machine runs its own full operating system. Because every virtual machine must maintain its own copy of an operating system, some resources are wasted. Containers, in contrast, are more lightweight and start much faster, often almost instantly. This difference in start-up time becomes important when designing applications that need to scale quickly during input/output (I/O) bursts.

While containers provide speed, virtual machines offer the full strength of an operating system and more resources, such as a dedicated kernel and full control over package installation.

Orchestrate containers

In AWS, containers can run on EC2 instances. We can have a large EC2 instance and run a few containers on it. A single instance is easy to manage, but it lacks high availability and scalability. As the number of instances and containers grows and they spread across multiple Availability Zones (AZs), they become more complex to manage. To manage compute at a large scale, we must answer the following questions:

How should containers be placed on EC2 instances?

What happens if a container fails?

What happens if an instance fails?

How should container deployments be monitored?
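An orchestration service answers these questions automatically. The toy Python sketch below illustrates two of them: placing containers on instances, and rescheduling containers when an instance fails. It does not show how Amazon ECS or Amazon EKS work internally. The `Instance` and `Task` classes, the `place` and `handle_instance_failure` functions, the "most free CPU" placement rule, and all IDs and sizes are hypothetical assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical unit of work: one or more containers with CPU units and memory (MiB) needs."""
    name: str
    cpu: int
    memory: int


@dataclass
class Instance:
    """Hypothetical EC2 instance with a fixed capacity of CPU units and memory (MiB)."""
    instance_id: str
    az: str
    cpu: int
    memory: int
    healthy: bool = True
    tasks: list = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu - sum(t.cpu for t in self.tasks)

    def free_memory(self) -> int:
        return self.memory - sum(t.memory for t in self.tasks)

    def fits(self, task: Task) -> bool:
        # Only healthy instances with enough spare CPU and memory can accept the task.
        return self.healthy and task.cpu <= self.free_cpu() and task.memory <= self.free_memory()


def place(task: Task, instances: list[Instance]) -> Instance | None:
    """Put the task on the healthy instance with the most free CPU (a simple spread-like rule).

    Returns the chosen instance, or None if no instance has enough capacity.
    """
    candidates = [i for i in instances if i.fits(task)]
    if not candidates:
        return None
    target = max(candidates, key=lambda i: i.free_cpu())
    target.tasks.append(task)
    return target


def handle_instance_failure(failed: Instance, instances: list[Instance]) -> list[Task]:
    """Mark an instance unhealthy and try to reschedule its tasks on the remaining instances.

    Returns the tasks that could not be rescheduled.
    """
    failed.healthy = False
    orphaned, failed.tasks = failed.tasks, []
    return [t for t in orphaned if place(t, instances) is None]


# Usage: two instances in different Availability Zones (hypothetical IDs and sizes).
a = Instance("i-a", "us-east-1a", cpu=2048, memory=4096)
b = Instance("i-b", "us-east-1b", cpu=2048, memory=4096)
fleet = [a, b]
for t in [Task("web-1", 512, 1024), Task("web-2", 512, 1024), Task("worker", 1024, 2048)]:
    placed_on = place(t, fleet)
    print(f"{t.name} -> {placed_on.instance_id if placed_on else 'UNPLACED'}")

unplaced = handle_instance_failure(a, fleet)
print("after i-a fails:", [t.name for t in b.tasks], "unplaced:", [t.name for t in unplaced])
```

When `i-a` fails, its tasks move to `i-b` because `i-b` still has enough spare capacity. If it had not, they would be reported as unplaced. Real orchestrators use richer rules than this; Amazon ECS, for example, offers task placement strategies such as spread and binpack.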

This coordination is handled by a container orchestration service. AWS offers two container orchestration services – Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS).

Elastic Container Service - ECS

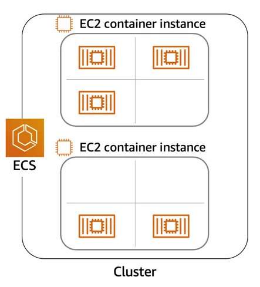

ECS is an end-to-end container orchestration service that helps to spin up new containers and manage them across a cluster of EC2 instances.

To run and manage containers on EC2 instances, we need to install the ECS container agent on them. This open-source agent communicates cluster management details to the Amazon ECS service. The agent can run on both Linux and Windows AMIs. An instance with the container agent installed is often called a container instance.

Once the Amazon ECS container instances are up and running, we can perform actions that include, but are not limited to, launching and stopping containers, getting cluster state, scaling in and out, scheduling the placement of containers across your cluster, assigning permissions, and meeting availability requirements.

To prepare applications to run on Amazon ECS, we create a task definition. The task definition is a text file, in JSON format, that describes one or more containers. A task definition is like a blueprint that describes the resources we need to run a container, such as CPU, memory, ports, images, storage, and networking information.
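As an illustration, the sketch below builds a minimal single-container task definition as a Python dictionary and prints it as JSON. The field names (`family`, `requiresCompatibilities`, `networkMode`, `containerDefinitions`, `cpu`, `memory`, `essential`, `portMappings`) follow the ECS task definition format. The helper `make_task_definition`, the family name `web-app`, the `nginx:latest` image, and the resource and port values are assumptions chosen for illustration.

```python
import json


def make_task_definition(family: str, image: str, cpu: int, memory: int,
                         container_port: int, host_port: int) -> dict:
    """Build a minimal single-container ECS task definition (EC2 launch type, bridge networking).

    `make_task_definition` is a hypothetical helper, not part of any AWS SDK.
    """
    return {
        "family": family,
        "requiresCompatibilities": ["EC2"],
        "networkMode": "bridge",
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "cpu": cpu,          # CPU units: 1024 units = 1 vCPU
                "memory": memory,    # hard memory limit in MiB
                "essential": True,   # if this container stops, the whole task stops
                "portMappings": [
                    {"containerPort": container_port, "hostPort": host_port, "protocol": "tcp"}
                ],
            }
        ],
    }


task_def = make_task_definition("web-app", "nginx:latest", cpu=256, memory=512,
                                container_port=80, host_port=8080)
print(json.dumps(task_def, indent=2))
```

With AWS credentials configured, a dictionary of this shape could be registered through boto3, for example with `boto3.client("ecs").register_task_definition(**task_def)`. That call is deliberately left out of the sketch so it runs offline.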

Kubernetes

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services. It is designed to bring software development and operations together, and it has built a large, rapidly growing, and well-established ecosystem.

If you already use Kubernetes, you can use Amazon EKS to orchestrate the workloads in the AWS Cloud. Amazon EKS is conceptually similar to Amazon ECS, but with the following differences:

An EC2 instance with the ECS agent installed and configured is called a container instance. In Amazon EKS, it is called a worker node.

In Amazon ECS, the running unit that groups one or more containers is called a task. In Amazon EKS, it is called a pod.

While Amazon ECS runs on AWS native technology, Amazon EKS runs on top of Kubernetes.
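The sketch below makes the task/pod analogy concrete. It translates a single ECS container definition into a roughly equivalent Kubernetes Pod manifest, expressed as a Python dictionary. The helper `ecs_container_to_pod` is hypothetical, and the mapping is deliberately simplified:

- ECS CPU units (1024 = 1 vCPU) become Kubernetes millicores.
- ECS memory in MiB becomes a `Mi` quantity.
- Everything else a real migration needs (networking, IAM, volumes, services) is ignored.

```python
import json


def ecs_container_to_pod(container: dict) -> dict:
    """Translate one ECS container definition into a minimal Kubernetes Pod manifest.

    Hypothetical helper for illustration; it only maps name, image, ports, CPU, and memory.
    """
    millicores = round(container["cpu"] * 1000 / 1024)  # 1024 ECS CPU units = 1 vCPU = 1000m
    memory = f'{container["memory"]}Mi'                  # ECS memory is expressed in MiB
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": container["name"]},
        "spec": {
            "containers": [
                {
                    "name": container["name"],
                    "image": container["image"],
                    # hostPort is dropped; in Kubernetes a Service would normally expose the pod.
                    "ports": [{"containerPort": p["containerPort"]}
                              for p in container.get("portMappings", [])],
                    "resources": {
                        "requests": {"cpu": f"{millicores}m", "memory": memory},
                        "limits": {"memory": memory},  # ECS "memory" is a hard limit
                    },
                }
            ]
        },
    }


# Hypothetical ECS container definition (same shape as in an ECS task definition).
ecs_container = {
    "name": "web-app",
    "image": "nginx:latest",
    "cpu": 256,
    "memory": 512,
    "portMappings": [{"containerPort": 80, "hostPort": 8080, "protocol": "tcp"}],
}
pod = ecs_container_to_pod(ecs_container)
print(json.dumps(pod, indent=2))
```

The same image runs unchanged in both services; only the orchestration wrapper around it differs.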

Should you have any queries, do let me know in the comments.

… HaPpY CoDiNg

Partha